Large engineering teams run hundreds of services that change every week. Reviewers feel this first. They open a pull request and lose time moving across repos, reconnecting dependencies, and trying to understand how the change fits into the larger system.

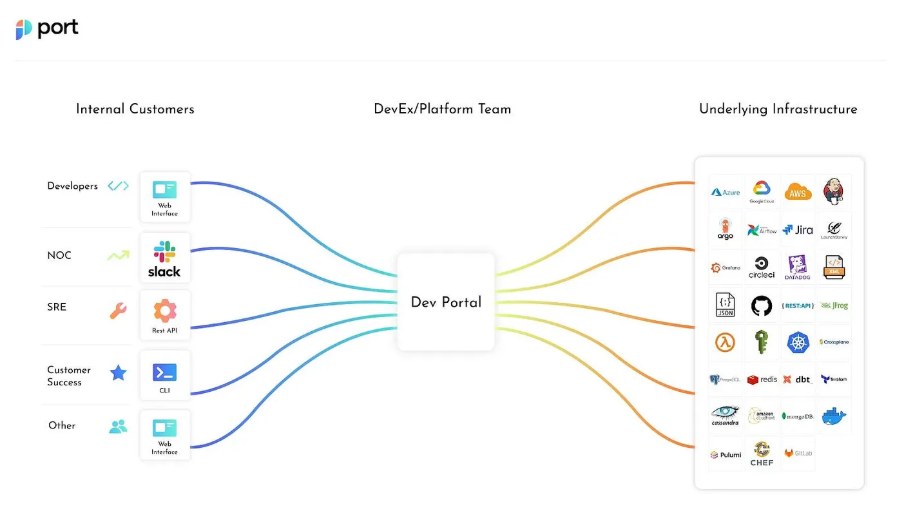

Internal developer portals (IDPs) help clean up that world. They bring service ownership into one place, keep production standards visible, and give leaders a clear view of what the ecosystem looks like. Engineers get better system awareness and cleaner metadata.

Does that solve everything? No. Code reviews still fall behind because reviewers do not see that same context inside the PR. They hunt for the right owners, try to trace caller and callee chains, and rely on memory for standards that live in the portal.

That gap slows code reviews, hides risk, and creates uneven outcomes across teams. This article covers how that gap forms and why closing it matters for code quality, team efficiency, and long-term service maturity.

IDPs solve system clarity, not review clarity

IDPs give large engineering teams a clean view of the entire environment. They track service ownership, production standards, deployment rules, and metadata that keeps systems consistent. This helps leaders manage growth across many stacks and many teams.

Source: https://www.port.io/blog/developer-portal

What the IDP knows, the reviewer does not see

When a pull request opens, none of this context reaches the reviewer. They see file changes and commit messages, then need to recreate information that already exists elsewhere.

Reviewers need answers to basic questions.

- Who owns this service?

- How does this service connect to upstream and downstream services?

- Which production standards apply to the change?

- What maturity level is the service supposed to maintain?

The IDP holds all of this, yet the code review workflow does not surface it.
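As a rough illustration, the four questions above map onto a handful of fields in a catalog entry. The sketch below is hypothetical: the `ServiceEntry` fields, the `CATALOG` data, and the `review_context` helper are invented for this example and do not reflect any particular portal's schema or API.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceEntry:
    """Hypothetical shape of one service record in an IDP catalog."""
    name: str
    owner: str
    maturity: str
    standards: list[str] = field(default_factory=list)
    depends_on: list[str] = field(default_factory=list)   # services this one calls
    dependents: list[str] = field(default_factory=list)   # services that call this one


# Illustrative data only; a real catalog would be fetched from the portal.
CATALOG = {
    "payments": ServiceEntry(
        name="payments",
        owner="team-billing",
        maturity="gold",
        standards=["coverage>=80", "python>=3.11"],
        depends_on=["ledger"],
        dependents=["checkout", "invoicing"],
    ),
}


def review_context(service: str) -> str:
    """Render the answers a reviewer needs as a short PR summary."""
    entry = CATALOG.get(service)
    if entry is None:
        return f"No catalog entry found for '{service}'."
    return "\n".join([
        f"Owner: {entry.owner}",
        f"Calls: {', '.join(entry.depends_on) or 'none'}",
        f"Called by: {', '.join(entry.dependents) or 'none'}",
        f"Standards: {', '.join(entry.standards) or 'none'}",
        f"Maturity: {entry.maturity}",
    ])


print(review_context("payments"))
```

The point of the sketch is that every answer is a lookup, not an investigation. The data already exists; what is missing is the step that renders it where the reviewer is working.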

The cost of missing context

Reviewers switch between repos, scan documentation, and check the portal for ownership and standards. This chase grows with the number of services. It slows review pickup, creates uneven feedback, and breaks the link between system-level standards and daily engineering work.

This is where the gap forms, and this is the gap that drives most review delays in large engineering teams.

What reviewers deal with in large microservice environments

Reviewing code inside a large service repo is not a linear task. A single pull request can affect several services across different repos, and each service brings its own owners, interfaces, standards, and maturity expectations. Reviewers step into this without a full view of the system.

Many engineering leaders see the same patterns across their teams.

Cross-service changes with no clear impact

A pull request often touches a handler, a schema file, or a shared library. The real effect sits deeper. Reviewers need to know which services call this code, which depend on it, and how far the change spreads. Without that view, they spend time jumping between repos.
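The question "how far does the change spread" is a reverse traversal of the call graph. The sketch below assumes a hypothetical `CALLS` mapping (each service to the services it calls) and walks it backwards to collect every direct and transitive caller. The service names are invented, and a real graph would come from code analysis or the catalog.

```python
from collections import deque

# Hypothetical call graph: each key calls the services in its list.
CALLS = {
    "checkout": ["payments", "cart"],
    "invoicing": ["payments"],
    "payments": ["ledger"],
    "reporting": ["invoicing"],
    "cart": [],
    "ledger": [],
}


def impacted_services(changed: str, calls: dict[str, list[str]]) -> list[str]:
    """Return every service that directly or transitively calls `changed`."""
    # Invert the graph so we can walk from a callee to its callers.
    callers: dict[str, set[str]] = {}
    for caller, callees in calls.items():
        for callee in callees:
            callers.setdefault(callee, set()).add(caller)

    # Breadth-first walk over callers; `seen` also guards against cycles.
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for caller in callers.get(current, ()):
            if caller not in seen and caller != changed:
                seen.add(caller)
                queue.append(caller)
    return sorted(seen)


print(impacted_services("ledger", CALLS))
# → ['checkout', 'invoicing', 'payments', 'reporting']
```

A one-line change in `ledger` reaches four other services in this toy graph, which is the kind of spread a reviewer cannot see from the diff alone.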

Our post on reviewing multi-service pull requests (with and without Bito) covers how this problem grows as services multiply, and how context loss becomes the bottleneck.

Standards defined in the portal, missing in the review

Production standards live inside the IDP. Teams set language versions, deployment rules, coverage expectations, and maturity levels. Reviewers do not see these checks in the pull request. A service that should meet a higher standard still relies on manual review to stay compliant.
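To make the idea concrete, here is a minimal sketch of checking a service against the requirements of its maturity level at review time. The `REQUIREMENTS` table, the level names, and the thresholds are all assumptions for illustration; real values would come from the portal.

```python
from dataclasses import dataclass

# Hypothetical minimum requirements per maturity level.
REQUIREMENTS = {
    "bronze": {"min_coverage": 50.0, "min_python": (3, 9)},
    "gold": {"min_coverage": 80.0, "min_python": (3, 11)},
}


@dataclass
class ServiceState:
    """Facts about a service as measured on the PR branch (illustrative)."""
    name: str
    maturity: str
    coverage: float
    python_version: tuple[int, int]


def check_standards(state: ServiceState) -> list[str]:
    """Return one human-readable finding per unmet requirement."""
    required = REQUIREMENTS[state.maturity]
    findings = []
    if state.coverage < required["min_coverage"]:
        findings.append(
            f"{state.name}: coverage {state.coverage:.0f}% is below the "
            f"{required['min_coverage']:.0f}% required for {state.maturity}"
        )
    if state.python_version < required["min_python"]:
        wanted = ".".join(map(str, required["min_python"]))
        findings.append(
            f"{state.name}: Python {wanted}+ is required for {state.maturity}"
        )
    return findings


for finding in check_standards(ServiceState("payments", "gold", 72.0, (3, 11))):
    print(finding)
# → payments: coverage 72% is below the 80% required for gold
```

Run as part of the review, a check like this turns a standard that lives in the portal into a comment on the pull request that would otherwise need a manual lookup.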

We also have a blog on why cross-service teams rely on structured review support that explains how standards begin to drift when reviews lack clear signals.

Ownership spread across teams and tools

Service ownership changes as teams reorganize or as systems grow. Reviewers lose time searching for the right owner, which blocks feedback and slows merges. IDPs track owners, yet the review system does not bring that metadata into the PR.
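Bringing owner metadata into the PR can be as simple as matching changed file paths against an ownership map. The sketch below assumes a hypothetical `OWNERS` mapping of path prefixes to teams, such as one exported from a catalog, and picks the most specific prefix for each file. The paths and team names are invented.

```python
from typing import Optional

# Hypothetical ownership map exported from a service catalog.
OWNERS = {
    "services/payments/": "team-billing",
    "services/payments/fraud/": "team-risk",
    "libs/shared/": "team-platform",
}


def owner_for(path: str, owners: dict[str, str]) -> Optional[str]:
    """Pick the owner whose path prefix is the longest match for `path`."""
    matches = [prefix for prefix in owners if path.startswith(prefix)]
    if not matches:
        return None
    return owners[max(matches, key=len)]


def owners_for_change(paths: list[str], owners: dict[str, str]) -> dict[str, list[str]]:
    """Group changed files by owning team; unmatched files go under 'unowned'."""
    grouped: dict[str, list[str]] = {}
    for path in paths:
        grouped.setdefault(owner_for(path, owners) or "unowned", []).append(path)
    return grouped


changed = [
    "services/payments/api.py",
    "services/payments/fraud/rules.py",
    "README.md",
]
print(owners_for_change(changed, OWNERS))
```

Longest-prefix matching is a design choice here: it lets a sub-team own a subdirectory without restating ownership for the whole service.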

Pickup delays caused by missing system context

Large teams see review queues grow because reviewers need time to understand the change before they can start. This delay compounds across services. It affects cycle time, release targets, and on-call stability.

These problems come from the same issue. The system-level clarity that IDPs deliver does not reach reviewers at the moment they need it.

Why this gap matters at scale

When the IDP and the review workflow operate with different context, engineering quality becomes harder to maintain. Large systems feel this immediately.

Uneven review quality: Reviewers rebuild context differently. This produces inconsistent feedback and unpredictable outcomes.

Hidden cross-service impact: Schema and interface changes affect multiple callers. Without dependency visibility in the PR, risk slips through.

Slow review pickup: Reviewers gather ownership, standards, and dependency information before they can even start. This delay grows with team size.

Standards drift: IDP standards do not appear in reviews. Services miss required criteria and maturity levels fall out of alignment.

Incomplete leadership signals: Scorecards lose accuracy when review-level data does not match system-level expectations.

The result is slower reviews, higher risk, and uneven quality across services.

What a connected IDP and code review workflow looks like

If reviewers could see the same system context the IDP maintains, reviews would move faster and catch more issues. That is the core idea behind how we built Bito’s AI Code Review Agent.

It learns your codebase, understands how services interact, and brings that information into the pull request. Developers stay inside GitHub, GitLab, or Bitbucket and still review with system awareness.

Here is how that works at a technical level.

Codebase-aware reviews inside the PR

Bito reads source code, imports, build files, CI pipelines, shared modules, and service communication patterns. It builds a multi-repo map of how services connect.

Reviewers then see upstream and downstream impact inside the pull request rather than searching across repos.

See multi-repo analysis: https://gitlab.com/bitoco/agent-exec-mgmt/-/merge_requests/511

Standards and rules applied automatically

Most teams store production standards and maturity requirements in their IDP. Bito brings those checks into the review.

It runs org-wide rules, project-specific rule files, and inline learned rules. Each suggestion links to the rule that triggered it.
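One way to picture layered rules is as a merge followed by a scan, where every comment carries the id of the rule that produced it. The sketch below is not Bito's implementation: the `Rule` type, the example rules, and the precedence order (learned over project over org when ids collide) are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    rule_id: str                    # identifier a suggestion can link back to
    scope: str                      # "org", "project", or "learned"
    message: str
    matches: Callable[[str], bool]  # predicate over one added line of a diff


# Assumed precedence: later scopes override earlier ones on the same rule_id.
SCOPE_ORDER = ["org", "project", "learned"]


def merge_rules(rules: list[Rule]) -> list[Rule]:
    """Keep one rule per id, preferring the most specific scope."""
    merged: dict[str, Rule] = {}
    for rule in sorted(rules, key=lambda r: SCOPE_ORDER.index(r.scope)):
        merged[rule.rule_id] = rule
    return list(merged.values())


def review(added_lines: list[str], rules: list[Rule]) -> list[str]:
    """Return one comment per (line, rule) match, tagged with the rule id."""
    active = merge_rules(rules)
    comments = []
    for number, line in enumerate(added_lines, start=1):
        for rule in active:
            if rule.matches(line):
                comments.append(
                    f"line {number}: {rule.message} [{rule.rule_id}, {rule.scope}]"
                )
    return comments


# Hypothetical rules at each layer.
RULES = [
    Rule("no-print", "org", "Use the logger instead of print()", lambda l: "print(" in l),
    Rule("no-print", "project", "Use structlog instead of print()", lambda l: "print(" in l),
    Rule("no-todo", "learned", "Resolve TODOs before merging", lambda l: "TODO" in l),
]

for comment in review(['print("debug")', "x = 1  # TODO tidy"], RULES):
    print(comment)
# → line 1: Use structlog instead of print() [no-print, project]
# → line 2: Resolve TODOs before merging [no-todo, learned]
```

Tagging each comment with a rule id and scope is what lets a reviewer trace a suggestion back to the standard behind it.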

Learn more about learned rules and custom guidelines here.

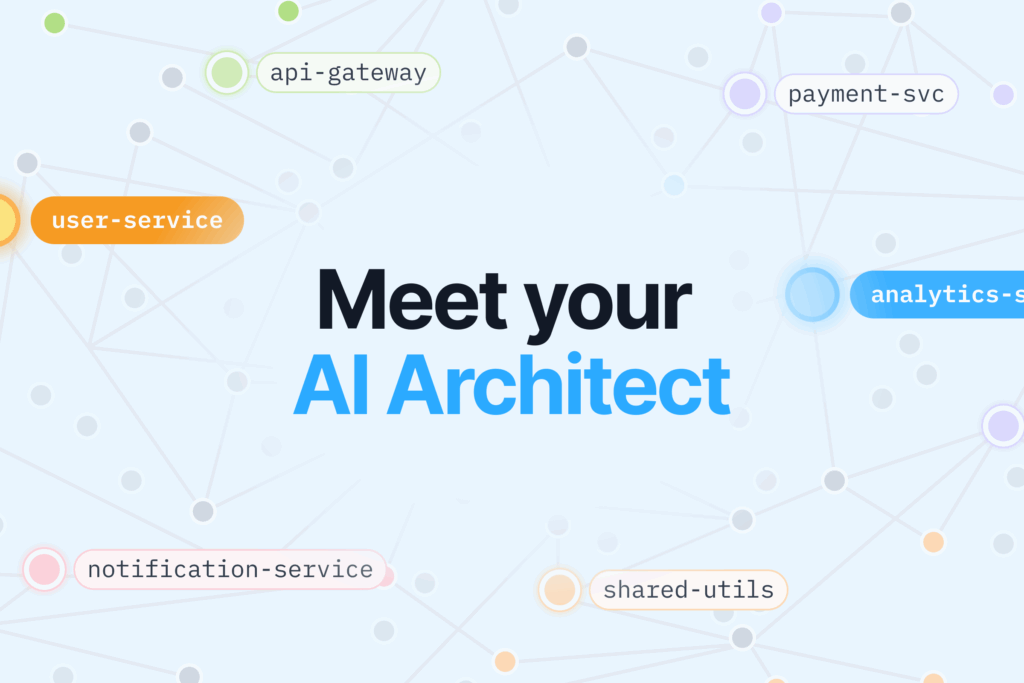

AI Architect: the system intelligence context engine

Bito’s AI Architect is the system context engine behind modern AI development workflows.

It continuously indexes your repositories and builds a knowledge graph that maps services, APIs, schemas, modules, and call paths across repos. This understanding comes directly from code, not documentation.

AI Architect does not replace coding tools or review systems. It supplies them with accurate system context so they stop reasoning in isolation.

How AI Architect is used

AI Architect supports two critical workflows by powering the agents that operate inside them.

Code generation inside tools like Cursor and Claude

When developers write code in Cursor or Claude, AI Architect provides context through MCP.

The coding agent receives API contracts, schemas, usage patterns, execution flows, and cross-repo dependencies pulled from the knowledge graph. This allows the agent to generate production-ready code that matches how the system actually works.

Instead of guessing how to call an internal service or reuse a shared library, the agent writes code aligned with existing patterns on the first pass.

Pull request reviews in GitHub and GitLab

AI Architect also powers code reviews, even though reviews live in GitHub and GitLab.

When a pull request is created, Bito’s AI Code Review Agent analyzes the diff and queries AI Architect for system-level context. The agent learns which services depend on the changed code, how schemas and APIs are used downstream, and where impact spreads across repos.

Review comments appear directly inside the pull request, but they are informed by the full system graph rather than the changed files alone.

This allows reviews to catch cross-repo impact, schema drift, and architectural violations that file-based reviews often miss.

Try Bito’s AI Developer Tools

Try Bito now and feel the difference immediately. System context shows up right where you write and review code. You see which services the change touches, how the data moves, and what might break downstream.

- You stop chasing context across repos.

- You stop relying on memory for standards.

- You get a clearer view of the change without slowing down.

This makes AI code generation and AI code reviews steadier and easier to trust, especially when a pull request spans many services. Once teams see that level of clarity inside the review, the old way of working feels noisy and slow.