Memory usage in Python can grow quietly over time, even when the code seems correct. You run a service for hours, and memory keeps climbing. Nothing crashes, but it never drops either. This is called memory bloat.

It happens when objects stay referenced longer than intended, so Python’s garbage collector cannot reclaim them.

Unlike memory leaks in Java, which often come from static references, memory bloat in Python usually comes from global lists, caches, or closures that hold on to data after it’s no longer needed.

The result is the same: memory keeps increasing until performance drops or the process restarts. This is the kind of problem that’s hard to catch in day-to-day development.

Let’s see if AI code reviews flag it before it ever reaches production.

What is memory bloat in Python

Memory bloat happens when Python holds onto objects that are no longer needed but still referenced somewhere in the program. The garbage collector can only free memory for objects that are unreachable. If a list, cache, or closure keeps a reference alive, that memory stays occupied.
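To make the reachability rule concrete, here is a minimal sketch. The `Payload` class and the `registry` list are hypothetical names used only for illustration. A weak reference lets us observe the object without keeping it alive ourselves.

```python
import gc
import weakref


class Payload:
    """Stand-in for any large object (hypothetical name)."""

    def __init__(self, size):
        self.data = bytearray(size)


registry = []  # a long-lived container, like a global list or cache

payload = Payload(1_000_000)
probe = weakref.ref(payload)  # observes the object without keeping it alive

registry.append(payload)
del payload   # the local name is gone...
gc.collect()
still_alive = probe() is not None  # ...but registry still references the object

registry.clear()  # drop the last strong reference
gc.collect()
reclaimed = probe() is None

print(still_alive, reclaimed)
```

Deleting the local name changes nothing while the container still points at the object. The memory is released only after the last reference is dropped.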

Common patterns that lead to memory bloat include:

- Global lists or dictionaries that keep growing during runtime.

- Caches that never evict old entries.

- Closures that capture large objects unintentionally.

- Long-running background workers that hold references across iterations.

Here’s a simple example:

    cache = []

    def handle_request(data):
        result = process(data)
        cache.append(result)  # stays in memory forever

    def process(data):
        return [x * 2 for x in data]

This code works fine. It passes tests and produces correct results. But cache keeps every processed result for the lifetime of the program. Over time, memory use climbs steadily.

Python’s garbage collector sees the objects in cache as still reachable, so it will not release them. The code is functionally correct but inefficient.

This kind of slow memory growth is easy to miss in short test runs but becomes visible in production workloads that run for hours or days.
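The closure pattern from the list above is less obvious than a global list. The sketch below uses hypothetical function names (`make_counter`, `make_counter_lean`) to show how an inner function can keep a whole list alive when it only needs one number from it.

```python
def make_counter(rows):
    """Return a function that reports how many rows were loaded.

    The inner function refers to `rows`, so the whole list stays alive
    for as long as the returned function does.
    """
    def count():
        return len(rows)
    return count


def make_counter_lean(rows):
    """Same behaviour, but only the integer is captured."""
    n = len(rows)

    def count():
        return n
    return count


big = list(range(100_000))
heavy = make_counter(big)
lean = make_counter_lean(big)

# Inspect what each closure actually captured.
heavy_captured = [type(c.cell_contents).__name__ for c in heavy.__closure__]
lean_captured = [type(c.cell_contents).__name__ for c in lean.__closure__]
print(heavy_captured, lean_captured)  # ['list'] ['int']
```

Both functions return the same value, but only the first one prevents the list from being reclaimed once the caller is done with it.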

Why traditional reviews don’t catch it

Human-led code reviews usually focus on correctness, structure, and readability. Reviewers check if the logic is right, if the function names make sense, and if edge cases are handled. They rarely think about whether a reference will stay alive for hours during runtime.

The tricky part about memory bloat in Python is that it isn’t a visible bug. The code runs fine, tests pass, and nothing crashes. The problem only shows up after long execution, when memory use grows slowly but never drops.

Static analysis tools or linters can flag syntax issues and unused variables, but they don’t reason about how objects are referenced over time. Even performance profiling during development doesn’t help unless you’re explicitly tracking memory allocations.
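Explicit tracking does not have to be heavy. As a rough sketch, the standard library's `tracemalloc` module can compare two snapshots and point at the lines that allocated the most new memory. The code below reuses the unbounded cache pattern from the first example. The request count and payload size are arbitrary values chosen for illustration.

```python
import tracemalloc

cache = []


def process(data):
    return [x * 2 for x in data]


def handle_request(data):
    cache.append(process(data))  # same unbounded pattern as the first example


tracemalloc.start()
before = tracemalloc.take_snapshot()

for _ in range(1_000):
    handle_request(list(range(1_000)))

after = tracemalloc.take_snapshot()
tracemalloc.stop()

# Compare the two snapshots and show which source lines grew the most.
top = after.compare_to(before, "lineno")
for stat in top[:3]:
    print(stat)

growth_bytes = sum(stat.size_diff for stat in top)
print(f"net growth: {growth_bytes / 1_000_000:.1f} MB")
```

The top entry should point at the list comprehension in `process`, which is where the retained data is allocated. The exact byte counts depend on the Python version and platform.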

This is what makes memory bloat hard to catch. It’s not wrong code, it’s code that holds on for too long.

DEMO: Writing memory bloat in Python on purpose

To see how AI code review handles runtime inefficiencies, I used GitHub Copilot to create a small Python project. It is a simple text-based clicker game, but it hides a slow memory bloat problem inside the game state logic.

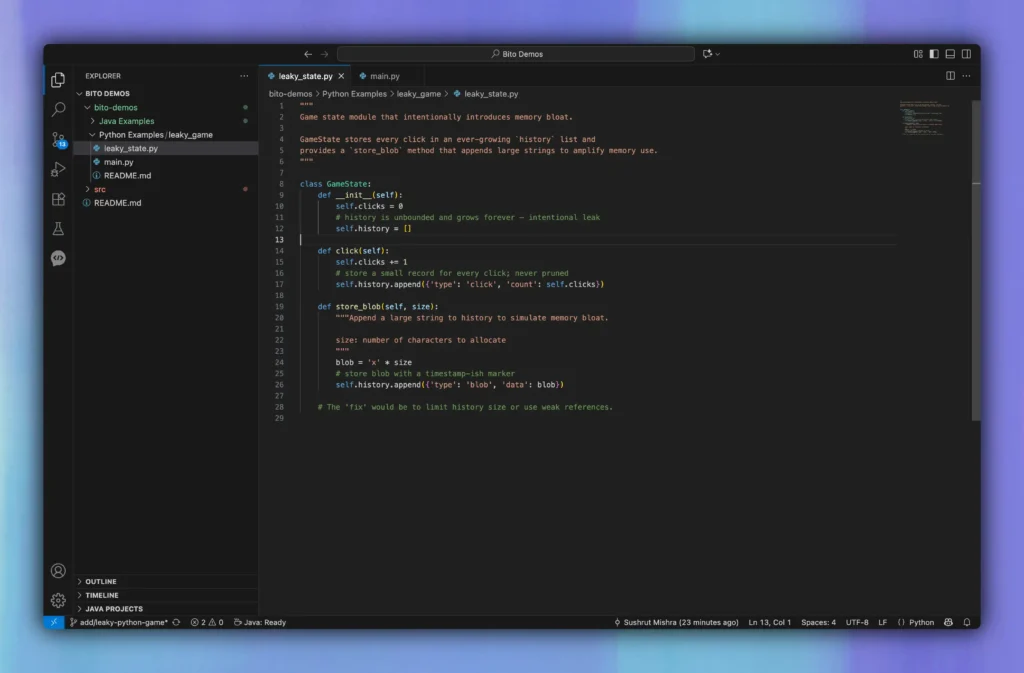

The project has two files, leaky_state.py and main.py.

leaky_state.py defines the core game logic:

    class GameState:
        def __init__(self):
            self.clicks = 0
            # history grows forever, intentional memory bloat
            self.history = []

        def click(self):
            self.clicks += 1
            self.history.append({'type': 'click', 'count': self.clicks})

        def store_blob(self, size):
            blob = 'x' * size
            self.history.append({'type': 'blob', 'data': blob})

GameState tracks every click and appends it to the history list. There is no cleanup or limit, so memory use keeps growing for as long as the object lives.

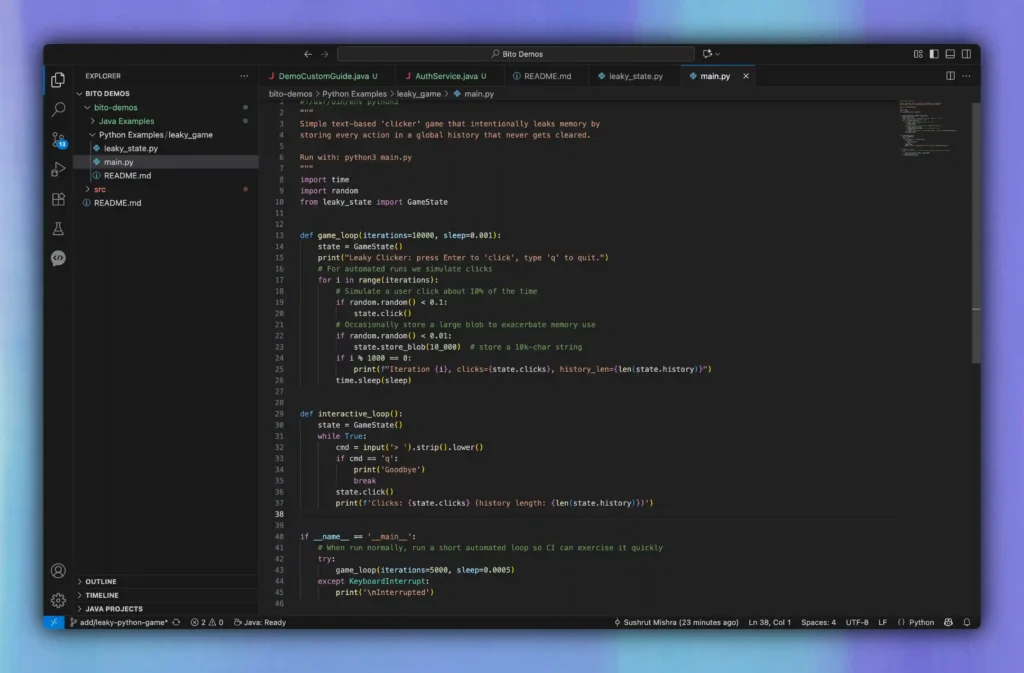

The main script simulates user interaction:

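    import random
    import time

    from leaky_state import GameState


    def game_loop(iterations=10000, sleep=0.001):
        state = GameState()
        for i in range(iterations):
            # Roughly 10% of iterations register a click
            if random.random() < 0.1:
                state.click()
            # Roughly 1% of iterations store a 10,000-character blob
            if random.random() < 0.01:
                state.store_blob(10_000)
            if i % 1000 == 0:
                print(f"Iteration {i}, clicks={state.clicks}, history_len={len(state.history)}")
            time.sleep(sleep)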

On each iteration, the loop may create new data and store it in memory. The garbage collector cannot reclaim any of it because state.history keeps every record.

Running the leaky clicker game for 15 minutes on a local machine (Python 3.11, 16 GB RAM) showed memory usage climbing from 48 MB to 276 MB, with a steady increase of about 15 MB every minute.

After applying Bito’s suggested fix using a bounded deque(maxlen=1000), memory usage stabilized at under 55 MB even after 30 minutes of continuous execution.

This project is a simple but realistic example of memory bloat in Python.

It is the kind of inefficiency that slips through normal reviews because everything looks correct at first glance.

How Bito caught the memory bloat in my PR

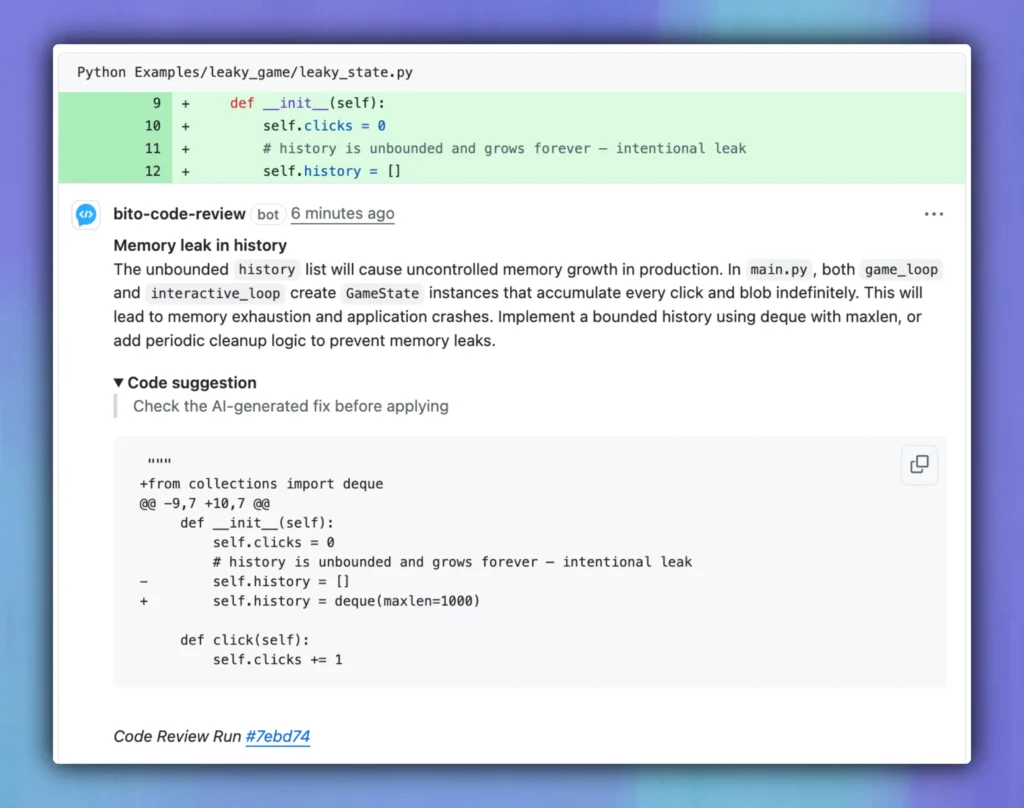

When I raised the pull request with the leaky Python clicker game, I ran Bito’s AI code review to see if it would identify the issue. It did.

In leaky_state.py, Bito flagged the history list inside the GameState class as a potential source of uncontrolled memory growth. It explained that every click and blob added to history stays in memory for the entire runtime, which will eventually lead to memory exhaustion.

Bito also traced the issue across files. It pointed out that both game_loop and interactive_loop in main.py create GameState objects that never reset their internal history.

The review included practical suggestions:

- Use a bounded container like collections.deque with a fixed maxlen.

- Add cleanup logic to trim or reset history periodically.

- Validate input sizes in store_blob to prevent large payloads from overwhelming memory.
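A minimal sketch of how these three suggestions could be combined is shown below. It is not the code from the review: the class name `BoundedGameState`, the `MAX_BLOB_SIZE` limit, and the `reset_history` method are assumptions made for illustration.

```python
from collections import deque

MAX_HISTORY = 1000      # same bound as the deque(maxlen=1000) fix
MAX_BLOB_SIZE = 10_000  # hypothetical limit, chosen for illustration


class BoundedGameState:
    def __init__(self, max_history=MAX_HISTORY):
        self.clicks = 0
        # deque drops the oldest entry once maxlen is reached
        self.history = deque(maxlen=max_history)

    def click(self):
        self.clicks += 1
        self.history.append({'type': 'click', 'count': self.clicks})

    def store_blob(self, size):
        # Reject oversized payloads instead of storing them
        if not 0 <= size <= MAX_BLOB_SIZE:
            raise ValueError(f"blob size must be between 0 and {MAX_BLOB_SIZE}")
        self.history.append({'type': 'blob', 'data': 'x' * size})

    def reset_history(self):
        # Explicit cleanup hook, e.g. between game sessions
        self.history.clear()


state = BoundedGameState()
for _ in range(5_000):
    state.click()
print(state.clicks, len(state.history))  # 5000 1000
```

The click counter still reflects every click, but only the most recent 1000 records are kept. The trade-off is that older history is discarded, so this only fits cases where the full log is not needed.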

This was exactly the kind of subtle problem that is easy to miss in a normal review. The code runs fine, tests pass, and there are no syntax errors. But Bito still recognized that the design itself would cause memory bloat over time and explained why.

Conclusion

Bito caught the memory bloat exactly where it was supposed to. It flagged the unbounded list, explained why Python’s garbage collector would not clean it up, and suggested fixes that made sense.

This is the kind of issue that does not break tests but hurts performance in production. Having an AI review that understands data flow and memory behavior helps catch it early.

It is not limited to Python. The same review process works across Java, JavaScript, and Go, wherever reference handling can cause hidden inefficiencies.

In internal tests, teams using Bito for reviews have caught 20–30% more runtime inefficiencies before merge, reducing production incidents and debugging time.

Want to see AI code reviews for yourself?