I’ve been using AI code review tools for a while now. Most of my work happens inside Bito, which, I should mention, is also the team I work with. Lately, I’ve been hearing a lot about CodeRabbit, so I decided to give it a spin and see how it stacks up on a real pull request.

We already have a Bito vs CodeRabbit comparison page that breaks down how Bito compares with CodeRabbit, and a full benchmarking report that goes deeper into accuracy, latency, and language support. But those are more structured and high-level.

This blog is something different. It’s a real-life look from a developer’s point of view: I pushed updates to my Expense Tracker project, opened a pull request, and let both Bito and CodeRabbit review the same code.

In this blog, I will show you how both tools handle real code reviews. Here is what I found.

Getting started with Bito and CodeRabbit

Before you start using a tool, you set it up, and Bito and CodeRabbit are no different. Both are straightforward to get started with: you sign up, connect your GitHub, GitLab, or Bitbucket account, approve a few permissions, and you are in.

It takes a couple of clicks and you are ready to run reviews. Exactly how modern dev tools should work.

But you are not here to read about onboarding. You want to know which tool actually helps once the code is pushed and the pull request is open.

Let’s get into that.

AI Code Review: Bito vs CodeRabbit

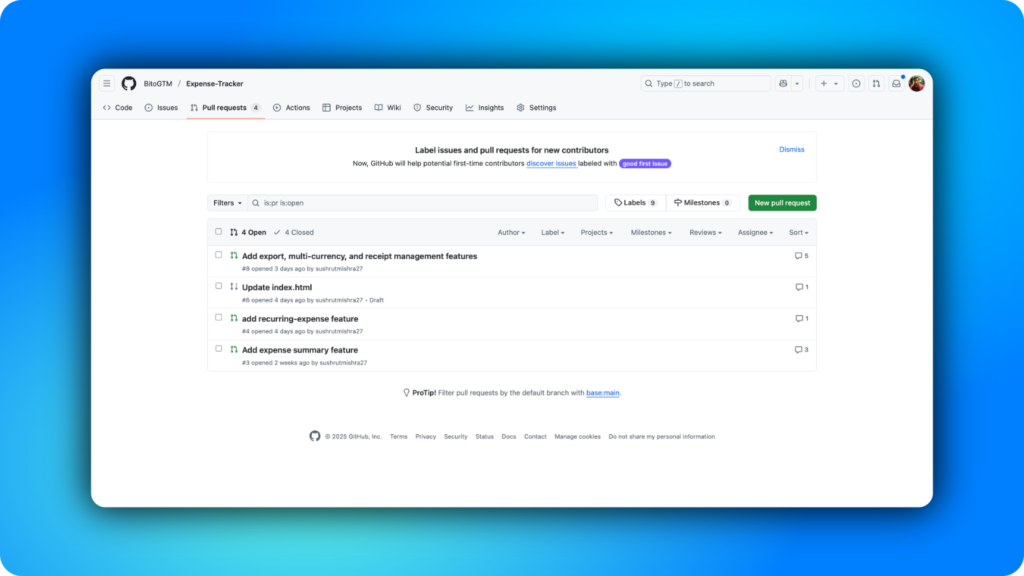

I have a simple Expense Tracker built with vanilla JavaScript, HTML, and CSS that I often use to test new tools. For this comparison:

- I updated the app with category logic, recurring expenses, Chart.js-powered analytics, and budget tracking with alerts.

- I also added new form fields and UI sections in the HTML, and updated the CSS for responsiveness and visual clarity.

Once the changes were in, I opened a pull request and let both Bito and CodeRabbit review the exact same code.

Now, let’s walk through how each tool performed across four areas that actually make a difference when you are reviewing code:

- PR summary and how they set the stage

- Changelist breakdown and clarity

- Review suggestions and how much noise they create

- Overall UI, UX, and developer experience

We will take each of these step by step.

1/ Bito vs. CodeRabbit: PR Summary

The first thing both tools do after you open a pull request is give you a summary of what they think you just pushed. It is a small thing, but it tells you a lot about how each tool approaches code reviews.

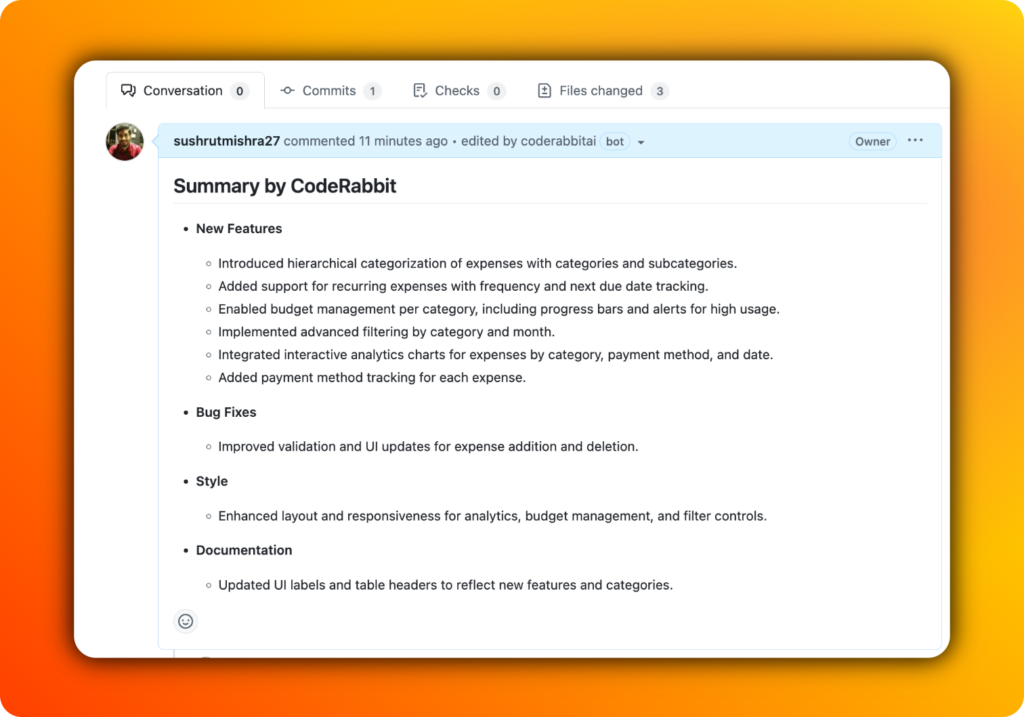

CodeRabbit gave me a well-structured summary. It broke things down into sections like New Features, Bug Fixes, Style, and Documentation. It was clean and easy to scan.

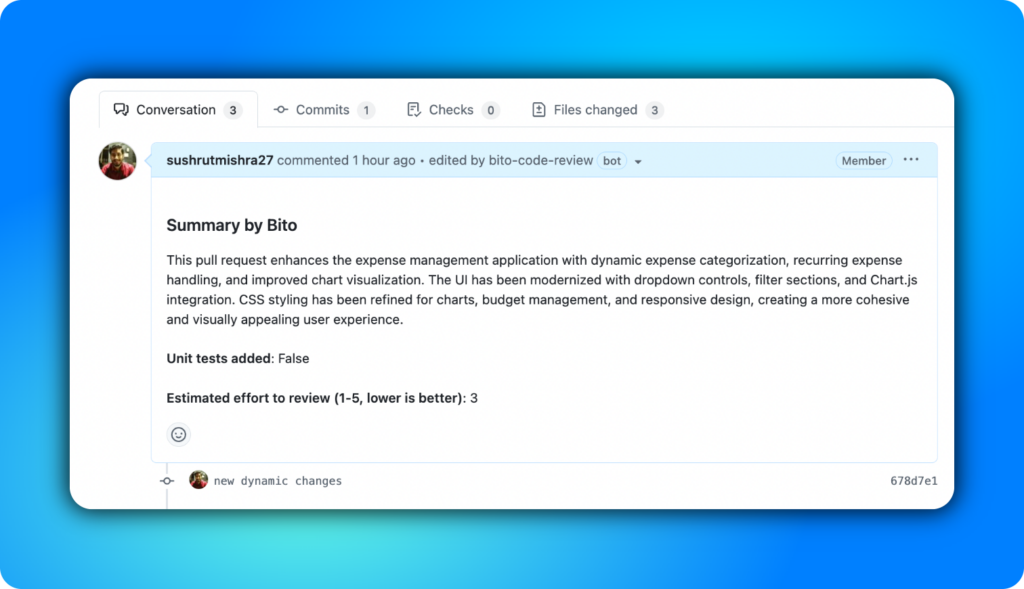

Bito kept it short and focused. Bonus points: it also flagged whether unit tests were added and estimated how much effort the pull request would take to review. That is the kind of detail that actually helps when you are managing reviews or working in a team.

If you want something that looks organized on paper, CodeRabbit does the job. But if you prefer a quick, meaningful snapshot that helps you decide how to approach the review, you might like Bito.

2/ Bito vs. CodeRabbit: Changelist

Both tools gave me a breakdown of what changed across files. That’s expected. But how they presented it was very different.

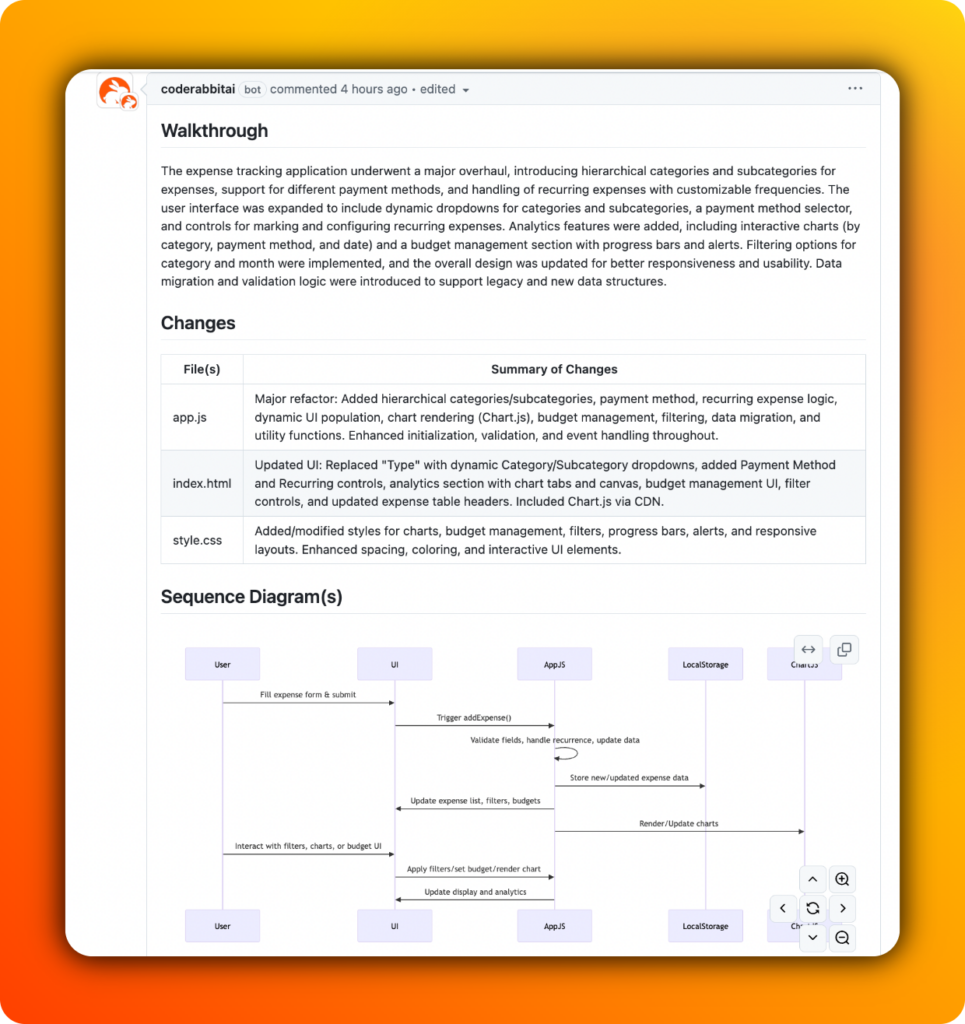

CodeRabbit felt like it wanted to show me everything it could do. It started with a long paragraph, which, to be honest, I didn’t read. Then it gave me a table, which was fine. After that, it added a sequence diagram. It looked nice but didn’t really help in this case.

CodeRabbit also adds a poem at the end, which might be fun in another context. On its own, that is not a big issue; you can easily turn it off in CodeRabbit’s repository-level settings. But that raises two other issues:

- CodeRabbit has a lot of options in the settings menu, and a few of them are still unclear to me.

- CodeRabbit offers these settings at the repository level, so if you want to turn a setting off, you have to do it repo by repo.

Personally, I’m not a fan of that. I don’t need AI to impress me; I want it to save me time. In this case, Bito does that and CodeRabbit does not.

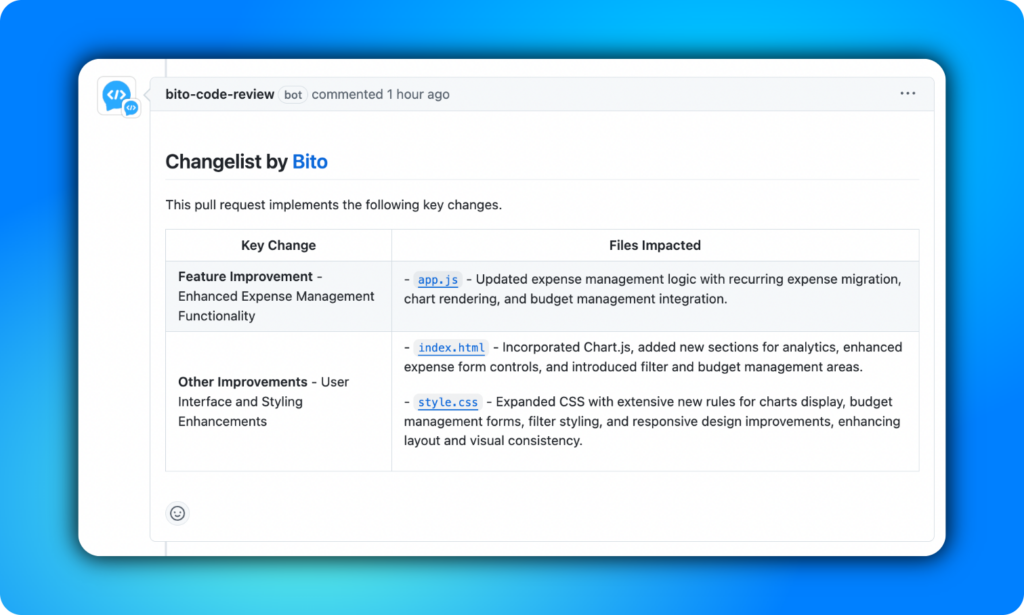

Bito’s changelist was clean and easy to parse. It grouped updates under functional categories like expense management or UI improvements, then listed the exact files touched.

I got a quick overview of what changed and where, without scanning walls of text. When you’re reviewing multiple PRs a day, this clarity saves real time.

3/ Bito vs. CodeRabbit: Reviewing Suggestions

This is where things got interesting. Both Bito and CodeRabbit caught real issues in my code. But they approached the review in very different ways.

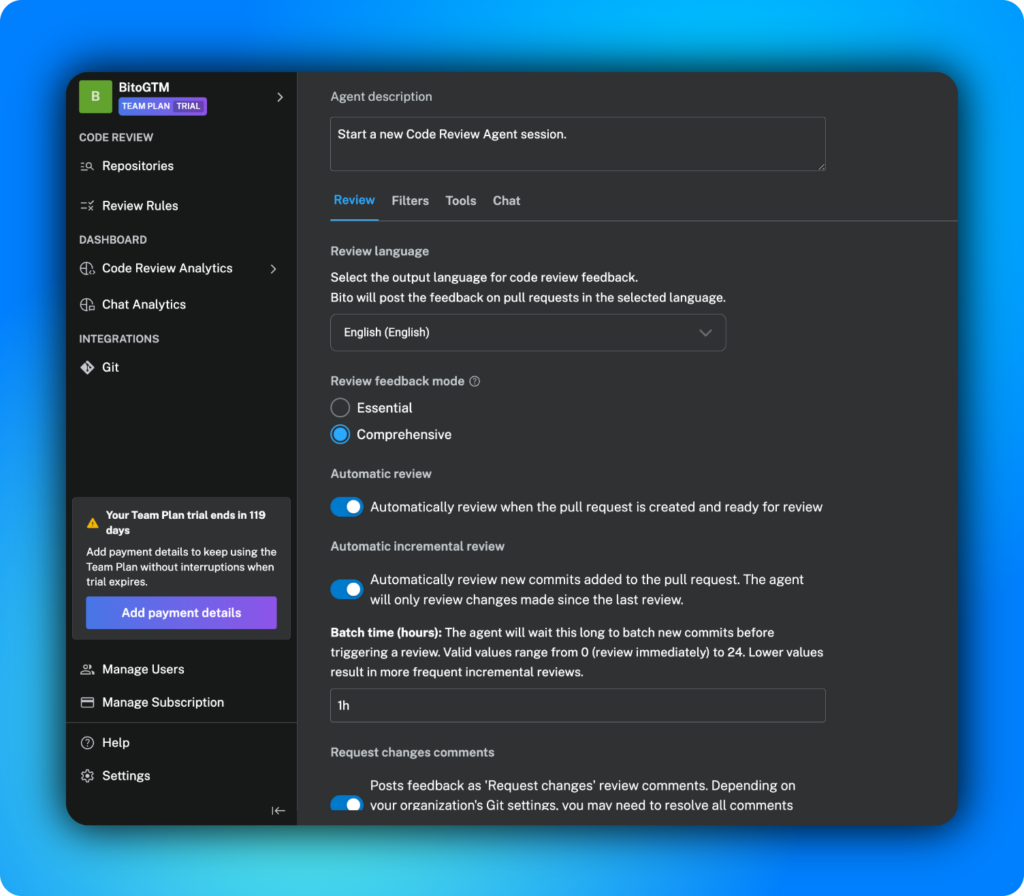

Quick note: Bito lets you choose between two review modes, Essential and Comprehensive. I kept it on Essential mode for this test because I wanted the AI to flag only critical or meaningful suggestions, not every possible nitpick. CodeRabbit, by default, seems to lean toward exhaustive reviews.

Now:

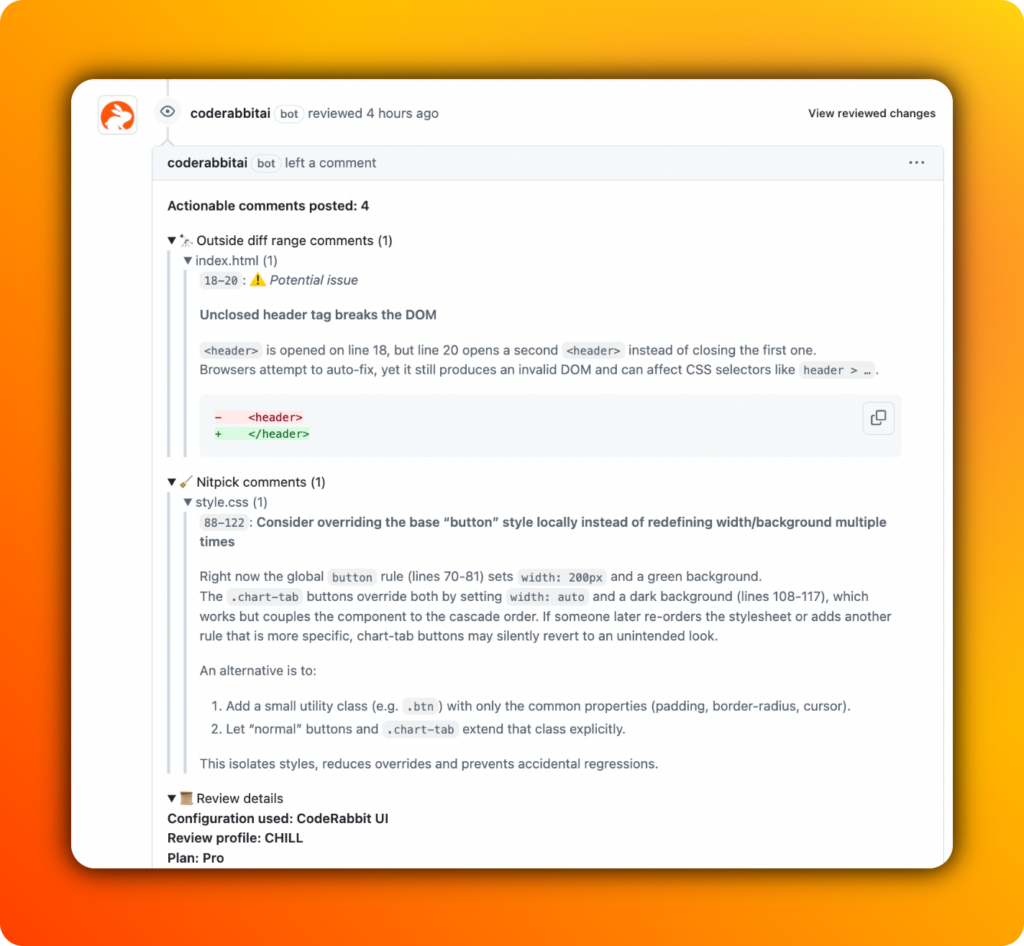

CodeRabbit flagged a lot. Some of it was genuinely helpful. It caught an unclosed <header> tag, which is easy to miss but important, because it can mess with your DOM structure and CSS selectors.

It also pointed out that I was using <span> elements where I should have used <label> for form accessibility. That’s not something every developer thinks about, and I didn’t either.

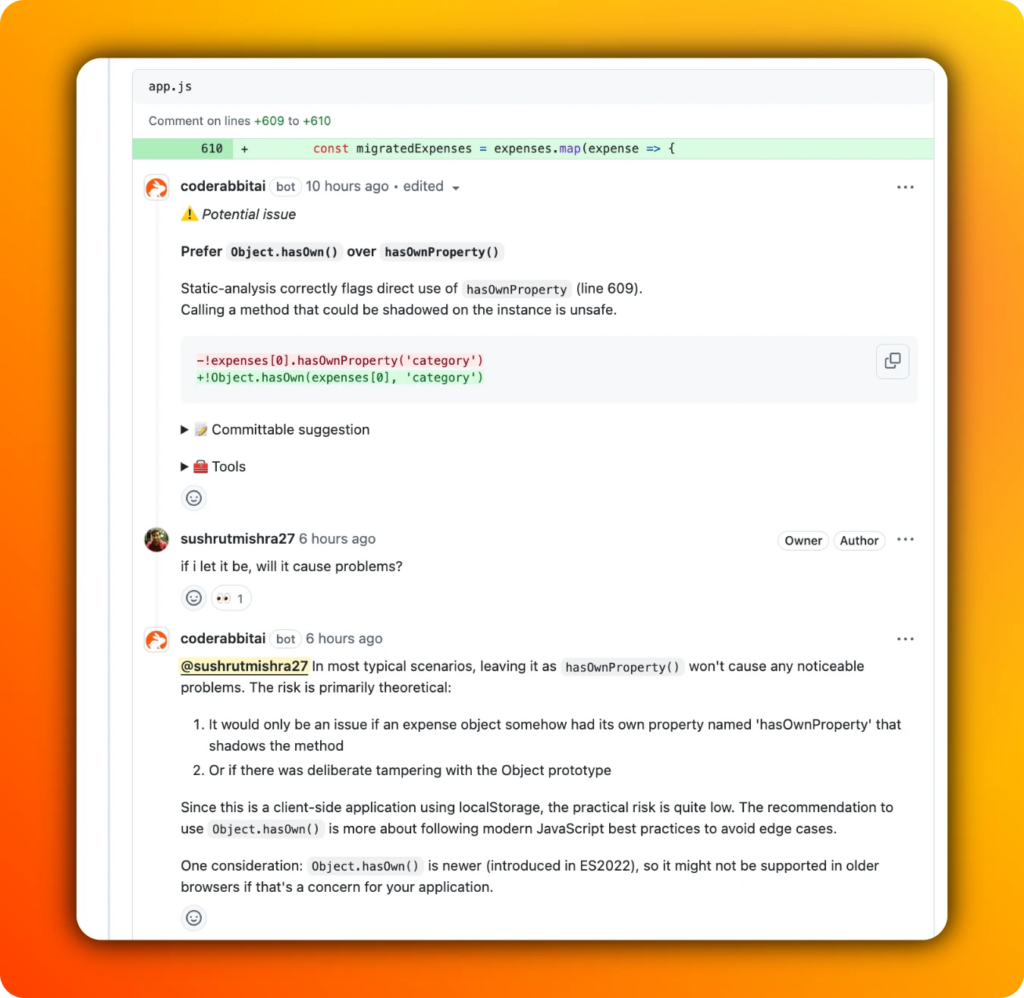

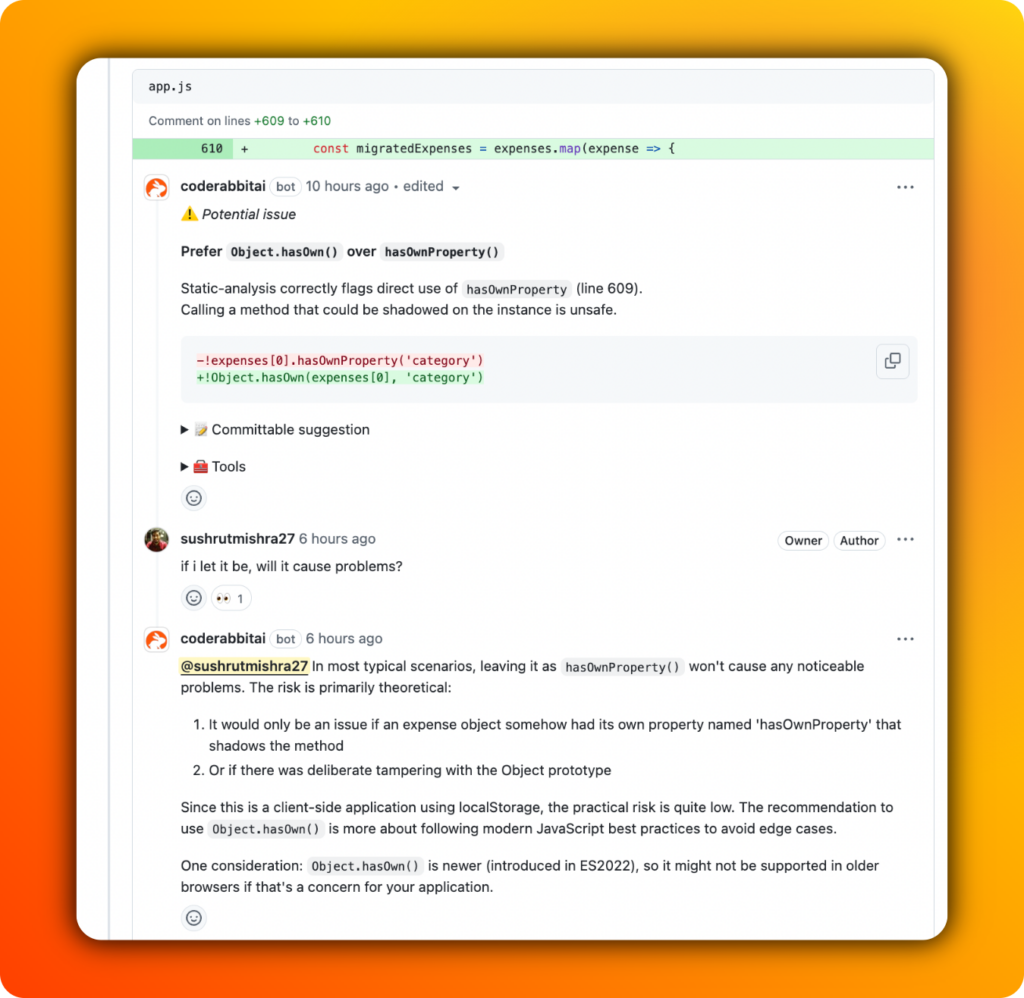

It flagged my CSS overrides and offered a refactor suggestion that, while technically correct, felt unnecessary for a small project like this. It also warned me about using hasOwnProperty(), explaining potential risks that, realistically, wouldn’t apply in this context.
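For context on the hasOwnProperty() warning: the usual concern is that `obj.hasOwnProperty(key)` assumes every object inherits that method from `Object.prototype`. The minimal sketch below shows the two standard cases where that assumption breaks, plus the common safe alternatives. I’m assuming this is the kind of risk the review had in mind; the `budgets` and `imported` objects and the `hasKey` helper are hypothetical, not code from my Expense Tracker.

```javascript
// Hypothetical data, not code from the Expense Tracker.
// 1) A prototype-less object (a common choice for dictionaries) has no
//    hasOwnProperty method at all.
const budgets = Object.create(null);
budgets.food = 300;

// 2) Data that contains a "hasOwnProperty" key shadows the inherited method.
const imported = { hasOwnProperty: 1, rent: 1200 };

// Calling the method directly throws a TypeError in both cases:
//   budgets.hasOwnProperty("food");   // budgets.hasOwnProperty is not a function
//   imported.hasOwnProperty("rent");  // imported.hasOwnProperty is not a function

// Safer option A: borrow the method from Object.prototype.
function hasKey(obj, key) {
  return Object.prototype.hasOwnProperty.call(obj, key);
}

// Safer option B: Object.hasOwn (ES2022; Node.js 16.9+ and current browsers).
console.log(hasKey(budgets, "food"));            // true
console.log(Object.hasOwn(imported, "rent"));    // true
console.log(Object.hasOwn(budgets, "toString")); // false
```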

Oh, and like Bito, CodeRabbit also has a chat feature where you can ask questions about its suggestions.

If you like thorough reviews and have time to filter through detailed feedback, CodeRabbit delivers. But in a fast-moving team, this level of verbosity could slow things down.

Now, here’s how Bito handled the same pull request.

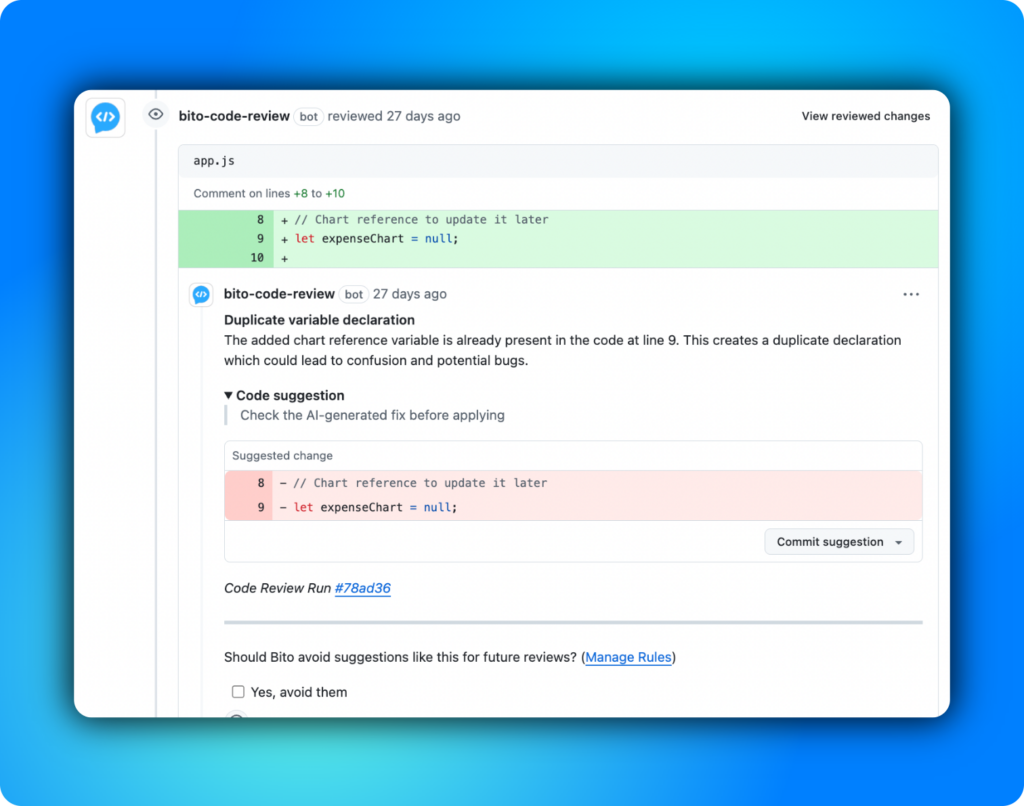

Bito flagged more issues, but they were clearly grouped and the comments were concise. It called out a duplicate variable declaration: a clear, simple catch, and something that could lead to confusion later.
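Why a duplicate declaration can slip through at all comes down to how JavaScript treats `var` versus `let`/`const`. This is a general illustration rather than the exact code Bito flagged: `var` silently accepts a second declaration in the same scope, while `let` and `const` are rejected at parse time. The `total` variable and the `redeclarationError` helper are hypothetical names for the sketch.

```javascript
// General illustration; `total` is a made-up name, not the variable Bito flagged.
// With var, a second declaration in the same scope is legal and silently
// replaces the first value, which is how this kind of bug hides.
var total = 0;
// ...many lines later...
var total = []; // no error: `total` is now an array
console.log(Array.isArray(total)); // true

// With let or const, the same mistake is rejected before the code runs.
// new Function() compiles a snippet on the spot so the error can be observed here.
function redeclarationError(source) {
  try {
    new Function(source);
    return null; // compiled without complaint
  } catch (err) {
    return err.name;
  }
}

console.log(redeclarationError("let t = 0; let t = [];")); // SyntaxError
console.log(redeclarationError("var t = 0; var t = [];")); // null
```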

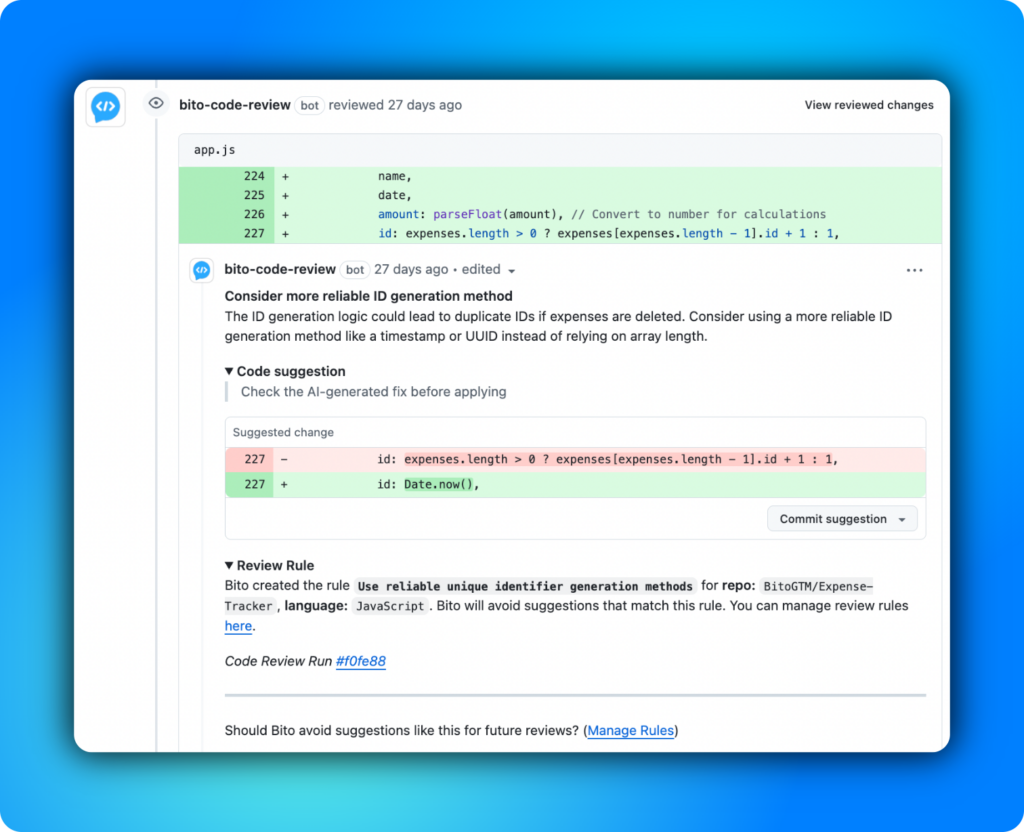

Bito also flagged the ID generation logic and suggested using Date.now() to avoid duplicate IDs after expenses were deleted.
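I haven’t reproduced my original ID code here, so this minimal sketch assumes the common `list.length + 1` pattern, which is one way deleting an expense leads to a duplicate ID. It then shows a `Date.now()`-based ID along the lines Bito suggested. The data, field names, and helper functions are hypothetical, and the same-millisecond guard in `timestampId` is my own addition, not part of Bito’s suggestion.

```javascript
// Hypothetical expense list; field names are illustrative, not my app's code.
let expenses = [
  { id: 1, title: "Coffee" },
  { id: 2, title: "Groceries" },
  { id: 3, title: "Rent" },
];

// Fragile scheme (assumed): derive the next ID from the array length.
function lengthBasedId(list) {
  return list.length + 1;
}

// Delete expense 2, then ask for a new ID.
expenses = expenses.filter((e) => e.id !== 2);
const fragile = lengthBasedId(expenses); // 3, which "Rent" already uses
console.log(expenses.some((e) => e.id === fragile)); // true: duplicate ID

// Timestamp-based ID: independent of how many items were deleted.
// Two calls in the same millisecond would collide, so bump past the last ID.
let lastId = 0;
function timestampId() {
  lastId = Math.max(Date.now(), lastId + 1);
  return lastId;
}

const a = timestampId();
const b = timestampId();
console.log(a !== b, expenses.some((e) => e.id === a)); // true false
```

If IDs ever need to stay unique across devices, `crypto.randomUUID()`, available in modern browsers, is a common alternative to timestamps.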

It also highlighted duplicated subcategories in my logic and a small CSS inconsistency that could affect layout.
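The cleanest fix for duplicated subcategories is to remove the repeats from the data itself. If the list is assembled from more than one place, a defensive cleanup pass also works; here is a minimal sketch using a `Set`, which keeps the first occurrence of each entry in its original order. The `subcategories` map and the `dedupeSubcategories` function are hypothetical, not copied from my project.

```javascript
// Hypothetical category map; not copied from my project.
const subcategories = {
  Food: ["Groceries", "Restaurants", "Groceries", "Coffee"],
  Transport: ["Fuel", "Parking", "Fuel"],
};

// Return a new map with repeated entries removed from each category.
// A Set preserves insertion order, so first-seen order is kept.
function dedupeSubcategories(map) {
  const result = {};
  for (const [category, items] of Object.entries(map)) {
    result[category] = [...new Set(items)];
  }
  return result;
}

const clean = dedupeSubcategories(subcategories);
console.log(clean.Food.join(", "));      // Groceries, Restaurants, Coffee
console.log(clean.Transport.join(", ")); // Fuel, Parking
```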

Where Bito shines is in keeping reviews focused. It doesn’t drown you in minor style points or theoretical risks unless they’re likely to cause real problems.

In short:

- Bito told me what mattered and let me get back to work.

- CodeRabbit told me everything it could find, whether it mattered or not.

If you are running reviews across a team, especially at scale, this difference is huge. Bito helps you move faster without missing critical issues. CodeRabbit gives you a detailed report but leaves you to filter out the noise.

My verdict

- CodeRabbit is detailed. Sometimes too detailed. Great if you’re learning, or if you want a second pair of eyes on everything, but be ready to spend time filtering what’s actionable.

- Bito is streamlined. It allows you to focus on what matters most, which is ideal if you’re working in production environments where speed and clarity are key.

Neither approach is wrong. It depends on how you and your team like to work.

If you’re a junior developer or working solo and want maximum coverage, CodeRabbit has value. If you’re managing teams, shipping features, and care about velocity without missing critical issues, Bito feels more aligned.

4/ Bito vs. CodeRabbit: UI, UX, and developer experience

When you are reviewing code, how feedback is delivered matters just as much as what is being flagged.

CodeRabbit throws a lot at you. Detailed comments, long explanations, diagrams, and poems. It feels more like reading a report than doing a review. The UI gets crowded fast, and I found myself skipping through half of it to find what actually needed action.

Bito keeps things simple. Clear comments, quick explanations, and fixes you can apply without overthinking. It also lets you manage suggestions easily: accept them, reject them, or turn them into rules. Plus, built-in linting and secret scanning run quietly in the background without adding to the noise.

If you care about getting through reviews without the extra fluff, Bito’s design helps you stay focused. CodeRabbit shows you everything, but not everything is worth your time.

Benchmarking AI code reviews

Everything I’ve shared so far is based on a single pull request and my experience working through it. That’s useful, but it is still just one repo, one review, and my personal workflow.

If you are looking for something more comprehensive than just one PR, we’ve done the work. Bito ran full-scale benchmarks across multiple codebases and languages, testing against top AI code review tools, including CodeRabbit.

See how they stack up on accuracy, latency, and language support in our full benchmark report.

Here are a few numbers that stood out to me.

- Bito covered around 69.5% of issues on average across five languages. CodeRabbit wasn’t far behind at 65.8%. In TypeScript, Bito pushed ahead with 75.3% coverage, while CodeRabbit landed at 72.3%.

- Where things got interesting was high-severity issues. Bito consistently flagged over 75% of critical problems across languages. That is the kind of thing you care about when you’re running production code reviews.

So my experience leaned toward Bito for focus and speed, and the data backs that up. CodeRabbit does well, but if you are looking for something that balances coverage with signal, Bito is a step ahead.

Again, one PR won’t tell you everything. But when personal experience and benchmarks line up, it is worth paying attention.

Also read: Comprehensive comparison – Bito’s AI Code Review Agent vs CodeRabbit

Naturally, I’m sticking with Bito

I work on the Bito project, after all. After running both tools on a real project, here’s where I landed. CodeRabbit is thorough and detailed, sometimes too detailed. If you enjoy sifting through every possible suggestion, it will keep you busy.

I don’t want busy. I want fast, clear, and actionable.

Bito gave me exactly that. It flagged what mattered, stayed out of the way when it should, and helped me move through reviews without second-guessing every line.

Bito’s UI is cleaner, the feedback sharper, and features like Custom Code Review Rules, built-in security scans, and multi-language support make it a better fit for working teams.

Want to see how it fits into your workflow? Book a demo and watch it in action, or