AI code reviews vs static analysis — which one actually improves code quality? Creating a pull request is easy, but waiting for a review is unpredictable. Will it be a quick approval, or will it get stuck in formatting debates and minor fixes? You don’t know.

Static analysis tools help by flagging syntax errors, security risks, and style violations. But since they rely on predefined rules, they often miss deeper issues like inefficient logic, performance bottlenecks, and scalability concerns.

AI code reviews go further. By understanding intent, they can detect logical flaws, optimize code structure, and suggest improvements that static analysis tools overlook. This article breaks down both approaches to help you decide when AI-driven reviews provide a real advantage.

Static Code Analysis: How it works and what it offers

Static code analysis (SCA) is often the first line of defence in maintaining code quality. It scans code without executing it, identifying syntax errors, security vulnerabilities, and deviations from coding standards. This method relies on predefined rules and patterns to detect potential issues.

How static code analysis works

SCA tools analyse source code using predefined rules to detect a wide range of issues, including:

- Syntax validation: Identifies missing semicolons, unmatched brackets, or incorrect variable declarations that could lead to compilation errors.

- Security vulnerabilities: Flags risks such as hardcoded credentials, SQL injection threats, or unsafe API usage that could expose the application to attacks.

- Code style and formatting: Enforces coding conventions by checking for consistent indentation, naming conventions, or linting rule adherence.

- Dead code detection: Identifies unused variables, redundant functions, or unreachable statements that add unnecessary complexity to the codebase.
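To make the idea of a predefined rule concrete, here is a minimal sketch of one such check, written with Python's standard `ast` module. The rule itself (the `SUSPICIOUS_NAMES` list and the `find_hardcoded_credentials` helper) is a hypothetical illustration, not how any particular SCA product is implemented. The source is only parsed, never run.

```python
import ast

# Assumed rule list for illustration; real tools ship far larger rule sets.
SUSPICIOUS_NAMES = ("password", "secret", "api_key", "token")


def find_hardcoded_credentials(source: str) -> list[tuple[int, str]]:
    """Return (line, variable name) for string literals assigned to suspicious names."""
    findings = []
    tree = ast.parse(source)  # parses only; the analysed code is never executed
    for node in ast.walk(tree):
        if not isinstance(node, ast.Assign):
            continue
        value = node.value
        if not (isinstance(value, ast.Constant) and isinstance(value.value, str)):
            continue
        for target in node.targets:
            if isinstance(target, ast.Name) and any(
                key in target.id.lower() for key in SUSPICIOUS_NAMES
            ):
                findings.append((node.lineno, target.id))
    return findings


sample = '''
db_password = "hunter2"
timeout = 30
greeting = "hello"
'''
print(find_hardcoded_credentials(sample))  # prints [(2, 'db_password')]
```

The check matches a pattern and nothing more: it has no idea whether the string is a real secret or a test fixture, which is the trade-off the later sections discuss.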

Benefits of static code analysis

SCA provides several advantages, making it an essential part of code review processes:

- Prevents common mistakes: Catches syntax errors or security vulnerabilities before the code is executed, reducing the likelihood of runtime failures.

- Automates code quality checks: Integrates into CI/CD workflows to automate code quality checks and enforce consistency across teams.

- Improves security: Includes vulnerability scanning to detect outdated dependencies and insecure coding patterns.
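As a rough illustration of the CI/CD point, the sketch below shows the shape of a build gate: run a static check over every source file and return a non-zero exit code if anything is found. The function names and the in-memory `sources` mapping are assumptions made for the example. A real pipeline would read files from the checkout and call an established linter instead of this single syntax check.

```python
import ast


def syntax_findings(sources: dict[str, str]) -> list[str]:
    """Parse each source without running it and collect syntax errors."""
    findings = []
    for name, text in sources.items():
        try:
            ast.parse(text, filename=name)
        except SyntaxError as err:
            findings.append(f"{name}:{err.lineno}: {err.msg}")
    return findings


def ci_exit_code(findings: list[str]) -> int:
    """CI systems treat a non-zero exit code as a failed step."""
    return 1 if findings else 0


# Hypothetical inputs standing in for files in a repository checkout.
sources = {
    "ok.py": "x = 1\n",
    "bad.py": "def f(:\n    pass\n",
}
findings = syntax_findings(sources)
for line in findings:
    print(line)
print("exit code:", ci_exit_code(findings))
```

In a pipeline, the script would end with `sys.exit(ci_exit_code(findings))` so that the merge is blocked until the findings are fixed.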

Limitations of static code analysis

Despite its benefits, SCA has certain limitations that developers should be aware of:

1. Limited contextual understanding: SCA detects rule violations but misses performance bottlenecks and inefficient logic.

    def find_duplicates(nums):
        duplicates = []
        for i in range(len(nums)):
            for j in range(i + 1, len(nums)):
                if nums[i] == nums[j]:
                    duplicates.append(nums[i])
        return duplicates

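This O(n²) algorithm is inefficient for large datasets. The code is syntactically valid and breaks no style or security rule, so a rule-based checker typically passes it without a warning.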
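For comparison, the nested loop above can be replaced by a single pass that remembers what it has already seen. This is a sketch of the kind of rewrite a reviewer might suggest, under two assumptions: the values are hashable, and reporting each duplicated value once is acceptable. The original appends a value once per matching pair, so its output can contain repeats.

```python
def find_duplicates(nums):
    """Return each value that occurs more than once, in order of its first repeat."""
    seen = set()       # every value encountered so far
    reported = set()   # values already added to the result
    duplicates = []
    for value in nums:
        if value in seen and value not in reported:
            duplicates.append(value)
            reported.add(value)
        seen.add(value)
    return duplicates


print(find_duplicates([3, 1, 3, 2, 1, 3]))  # prints [3, 1]
```

Set membership tests are constant time on average, so the whole function runs in roughly O(n) time at the cost of O(n) extra memory.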

2. Noisy warnings and false positives: SCA often flags issues that don't matter in practice, which trains developers to ignore warnings.

    function processData(input) {
        let unusedVar = "This variable is never used";
        return input.trim();
    }

A linter flags unusedVar. The warning is accurate, but the variable doesn't affect functionality, so it competes for attention with findings that do. AI-powered reviews reduce noise by prioritizing real issues.
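One simple way to picture prioritization is a triage step that ranks findings by severity and hides the cosmetic ones. The sketch below is purely illustrative: the `Finding` type, the 1 to 3 severity scale, and the `triage` function are assumptions, and an AI reviewer would weigh much richer context than a single number.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule: str
    line: int
    severity: int  # assumed scale: 3 = likely bug or vulnerability, 1 = cosmetic


def triage(findings: list[Finding], min_severity: int = 2) -> list[Finding]:
    """Keep findings at or above the threshold, most severe first."""
    kept = [f for f in findings if f.severity >= min_severity]
    return sorted(kept, key=lambda f: (-f.severity, f.line))


findings = [
    Finding("unused-variable", line=2, severity=1),
    Finding("sql-injection", line=40, severity=3),
    Finding("missing-null-check", line=12, severity=2),
]
for finding in triage(findings):
    print(finding.rule, finding.line)
```

With the default threshold, the unused-variable warning is dropped from the report and the injection risk is listed first.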

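3. No runtime analysis: SCA doesn't execute code, so it can miss runtime errors that depend on dependencies and execution flow.

    class UserService {
        static User currentUser = null;

        static void printUserName() {
            // Throws NullPointerException while currentUser is still null
            System.out.println(currentUser.getName());
        }
    }

If currentUser is still null when printUserName() runs, this fails at runtime. Some analyzers include null-dereference checks, but whether the field has been assigned depends on what executed earlier, and a tool that never runs the code can only approximate that.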
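For readers who prefer Python, here is a rough analogue of the same situation with the guard a reviewer would likely ask for. The `User` and `UserService` classes mirror the Java example and the placeholder string is an assumption for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    name: str


class UserService:
    # Optional makes the "may be unset" state explicit in the type.
    current_user: Optional[User] = None

    @classmethod
    def user_name(cls) -> str:
        # Guard the access instead of assuming a user has been set.
        if cls.current_user is None:
            return "<no user signed in>"
        return cls.current_user.name


print(UserService.user_name())   # prints <no user signed in>
UserService.current_user = User("Ada")
print(UserService.user_name())   # prints Ada
```

Declaring the field as `Optional[User]` also gives a type checker such as mypy enough information to report an unguarded `.name` access, which narrows this particular gap without running the code.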

While SCA is an essential component of modern development workflows, it operates within rigid rule sets. It is effective at enforcing standards but lacks the ability to interpret intent, analyse complex dependencies, or provide meaningful refactoring suggestions.

This is where AI code reviews offer a more intelligent, adaptive approach to improving code quality.

AI Code Reviews

Static analysis enforces rules, but code quality also depends on logic, performance, and maintainability — factors that rule-based tools struggle to assess.

AI code reviews go beyond predefined checks by providing context-aware insights. Instead of scanning for rule violations, AI understands the intent behind code modifications. It analyses patterns across the repository, detects inefficiencies, and suggests improvements that align with best practices.

This approach helps teams catch issues that static analysis tools might overlook while reducing the time spent on manual reviews.

Large Language Models (How they can reason like a senior developer)

What makes AI code reviews actually useful is how LLMs reason with context. Instead of checking for rule violations in isolation, they look at how a change fits into the bigger picture of your codebase, which is what lets them go beyond surface-level suggestions.

Large language models like the ones powering Bito do exactly that. They analyze code the way an experienced engineer would — by understanding structure, flow, and intent across files and functions.

These models interpret relationships within your codebase. They track how variables move, how functions interact, and how data flows through logic. That context lets them spot inefficiencies, recommend cleaner patterns, or catch inconsistencies that a rule-based linter will miss.

LLMs also adapt. When they’ve seen enough pull requests from your team, they recognize your patterns. They stop flagging non-issues and start reinforcing your standards. Over time, this creates more consistent reviews, fewer back-and-forths, and cleaner merges.

More on how AI code reviews work:

AI-powered reviews combine machine learning, static analysis, and repository-wide context awareness to provide more meaningful feedback. By analysing past code changes, learning from developer inputs, and recognising patterns, AI continuously refines its recommendations.

- Understands repository-wide context: AI analyses the entire codebase to detect logical inconsistencies and redundant implementations.

- Improves performance and scalability: Identifies inefficient algorithms, excessive resource usage, and potential bottlenecks that impact application responsiveness.

- Provides adaptive security analysis: Flags security risks based on usage context to help developers prioritise and fix critical vulnerabilities.

- Learns from past reviews: Recognises team coding patterns and refines suggestions to align with project-specific best practices.

- Generates actionable recommendations: Instead of just pointing out problems, AI suggests concrete fixes with explanations to help developers resolve issues faster.
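The points above can be pictured as assembling context before a model sees the change. The sketch below shows one plausible way to combine a diff, related repository code, and team guidelines into a single review prompt. It is an assumption-laden illustration, not Bito's or any vendor's implementation: `build_review_prompt` and its parameters are hypothetical, and no model is actually called.

```python
def build_review_prompt(
    diff: str,
    related_snippets: dict[str, str],
    guidelines: list[str],
) -> str:
    """Combine the change, surrounding code, and team rules into one prompt string."""
    sections = ["You are reviewing a pull request.", "", "Team guidelines:"]
    sections += [f"- {rule}" for rule in guidelines]
    sections += ["", "Related code from the repository:"]
    for path, snippet in related_snippets.items():
        sections += [f"# {path}", snippet.rstrip()]
    sections += ["", "Diff under review:", diff.rstrip()]
    return "\n".join(sections)


prompt = build_review_prompt(
    diff="+ total = sum(prices)\n- total = 0\n",
    related_snippets={"billing/cart.py": "def checkout(prices):\n    ...\n"},
    guidelines=["Prefer built-ins over manual loops"],
)
print(prompt)
```

The design point is that the model receives more than the changed lines: the related code and the guidelines are what make feedback about intent and consistency possible.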

AI Code Reviews with Bito

A solid example of an AI code review tool is Bito’s AI Code Review Agent. It is designed to improve code quality while reducing review time. It integrates with GitHub, GitLab, and Bitbucket, providing real-time insights that help teams merge code faster and with greater confidence.

Key features include:

- AI that understands your code: Bito brings contextual awareness to each review, offering insights similar to what you’d get from a senior engineer.

- 1-click setup for Git workflows: Supports GitHub, GitHub (Self-Managed), GitLab, GitLab (Self-Managed), and Bitbucket. Azure DevOps support is coming soon.

- Pull request summary: A quick, detailed summary gives the reviewer context, defines the type of PR, and estimates the effort to review.

- 1 click to accept suggestions: Pro-quality suggestions and code fixes appear inline. Accept suggestions with one click.

- Changelist: A clear table added directly to pull request comments, summarizing changes and highlighting impacted files.

- Security analysis: Integrates tools like Snyk, Whispers, and detect-secrets to identify vulnerabilities and protect your codebase.

- Custom code review rules: Automatically refines suggestions based on your feedback. Customize reviews by providing team guidelines to enforce best practices.

Learn about the key features in detail here.

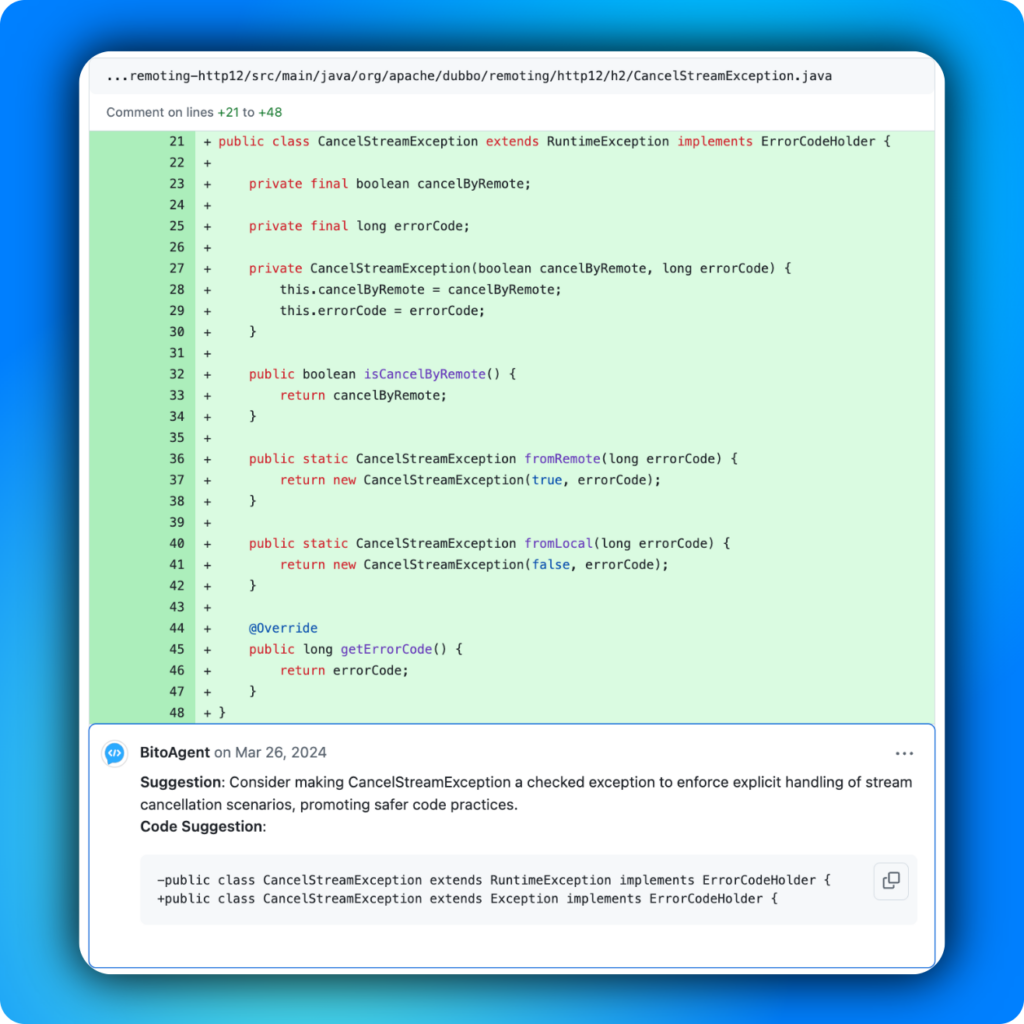

Bito already helps large engineering teams speed up code reviews, catch critical issues early, and maintain coding standards without increasing workload. For example, Bito’s AI Code Review Agent was tested on real-world pull requests from Apache Dubbo and Netty, two widely used open-source projects.

Here are specific instances where Bito identified issues that static analysis tools missed:

- PR Review on Apache Dubbo: Bito flagged a logical inconsistency that a static analysis tool wouldn’t detect.

- PR Review on Netty: AI identified an inefficient operation that could lead to performance degradation.

A snapshot from Apache Dubbo:

These examples highlight how AI-based reviews provide insights beyond traditional static analysis, improving code quality while reducing manual effort.

Why engineering teams use Bito

Bito’s AI Code Review Agent helps engineering teams merge pull requests 89% faster, reduce regressions by 34%, and generate 87% of PR feedback, all while delivering an average $14 return for every $1 spent. It’s built for teams that want fast, reliable reviews without sacrificing code quality.

Thousands of developers use Bito to eliminate bottlenecks in their workflows. The agent provides context-aware suggestions, structured feedback, and smart summaries that help teams ship clean code the first time.

One standout example is Gainsight, where engineers ran Bito on over 1,000 pull requests covering 184,500 lines of code. Bito flagged 2,660 issues, helped them reduce pull request cycle time by 49%, and delivered suggestions with a 20% acceptance rate.

“Before Bito, merging a pull request could take up to a day due to various issues. Now, it’s streamlined and efficient. Even our senior engineers benefit from Bito’s insights, helping us avoid common mistakes and focus on more complex challenges.”

— Naveen Ithappu, Senior Engineering Leader, Gainsight

Bito brings speed, clarity, and confidence to every review cycle. Teams no longer waste hours on minor fixes or personal preferences — they focus on writing better code.

Read more: Bito case studies

AI Code Reviews vs Static Analysis

So far, we’ve established that static analysis and AI code reviews serve different purposes.

- Static analysis enforces coding standards and catches common issues based on predefined rules. AI-powered reviews, on the other hand, analyse the intent behind code changes, providing deeper insights into performance, scalability, and logic.

- Static analysis reliably identifies syntax errors, security vulnerabilities, and formatting inconsistencies. However, it lacks adaptability and context awareness. AI-powered reviews go beyond pattern matching, understanding how changes impact the overall codebase. They reduce false positives, provide meaningful suggestions, and help teams improve code quality faster.

The table below highlights key differences between the two approaches:

Conclusion

Code reviews balance speed and quality. Static analysis enforces rules, AI speeds up feedback, and human reviewers handle architecture and design. The best teams use all three.

Bito’s AI Code Review Agent fits right in: real-time suggestions, smarter feedback, fewer bottlenecks.

Try AI-powered reviews with a free 14-day trial of the 10X Developer Plan.